With the release of XenDesktop 7.12, Citrix introduced Local Host Cache (LHC) functionality into the FMA world. Since 2013, when XenDesktop 7.0 was released without LHC, this has been the most awaited change. I recently started testing XenDesktop 7.15 in my lab, so I would like to take the opportunity to write down my notes about Local Host Cache. Let’s start from the beginning …

Update: Added detailed information about localDB import process

Function

The main function of Local Host Cache is to allow all users to connect and reconnect to all published resources during a database outage. In the FMA world, Local Host Cache is the next step toward a stable, truly highly available XenApp and XenDesktop 7.15 infrastructure. The first HA solution was connection leasing, introduced in XenDesktop 7.6. For more details see my post: Connection Leasing. The implementation history is presented in Table 1 below.

(*) Depends on the installation type.

The following table shows the Local Host Cache and connection leasing settings after a new XenApp or XenDesktop installation, and after an upgrade to XenApp or XenDesktop 7.12 (or a later supported version).

LHC comparison: XenDesktop 7.15 vs XenApp 6.5

Although the Local Host Cache implementation in XenDesktop 7.15 (more precisely, from version 7.12 onward) shares its name with the Local Host Cache feature in XenApp 6.x, there are significant differences you should be aware of. My subjective summary of pros and cons is the following:

Advantages:

- LHC is supported for on-premises and Citrix Cloud installations

- LHC implementation in XenDesktop 7.15 is more robust and immune to corruption

- Maintenance requirements are minimized, such as eliminating the need for periodic dsmaint commands

Disadvantages:

- Local Host Cache is supported for server-hosted applications and desktops, and static (assigned) desktops; it is not supported for pooled VDI desktops (created by MCS or PVS).

- No control over the secondary broker election: the election is based on the alphabetical list of FQDNs of the registered Delivery Controllers. The election process is described in detail below.

- Sizing for every Delivery Controller must include additional compute resources.

Local Host Cache vs Connection leasing – highlights

- Local Host Cache was introduced to replace connection leasing, which will be removed in a future release.

- Local Host Cache supports more use cases than connection leasing.

- During outage mode, Local Host Cache requires more resources (CPU and memory) than connection leasing.

- During outage mode, only a single broker will handle VDA registrations and broker sessions.

- An election process decides which broker will be active during outage, but does not take into account broker resources.

- If any single broker in a zone is not capable of handling all logons during normal operation, it won’t work well in outage mode.

- No site management is available during outage mode.

- A highly available SQL Server is still the recommended design.

- For intermittent database connectivity scenarios, it is still better to isolate the SQL Server and leave the site in outage mode until all underlying issues are fixed.

- There is a limit of 10,000 VDAs per zone.

- Unlike connection leasing, whose leases expire after 14 days, there is no 14-day limit.

- Pooled desktops are not supported in outage mode, in the default configuration.

Overall, we would say that Citrix has achieved one of the biggest milestones in the XenDesktop 7.x releases. The current implementation is far from ideal, but changes are going in the right direction. Further improvements to LHC are still required to provide an enterprise-wide high-availability feature for database outages.

How to turn it on?

The status of the HA options can be checked with the PowerShell cmdlet Get-BrokerSite. See the screenshot below:

To change the status of the HA options, use the Set-BrokerSite cmdlet.

To enable Local Host Cache (and disable connection leasing), enter:

Set-BrokerSite -LocalHostCacheEnabled $true -ConnectionLeasingEnabled $false

To disable Local Host Cache (and enable connection leasing), enter:

Set-BrokerSite -LocalHostCacheEnabled $false -ConnectionLeasingEnabled $true

How does it work?

In the FMA world, Local Host Cache is built on three core FMA services and Microsoft SQL Server Express LocalDB:

- Citrix Broker Service, also called the Principal Broker Service. In the Windows Server operating system it runs as the process BrokerService. For Local Host Cache, the principal broker is responsible for the following tasks:

- registration of all VDAs, including ongoing management from a Delivery Controller perspective

- brokering new sessions and managing existing ones: resource enumeration, creation and verification of STA tickets, user validation, disconnected sessions, etc.

- monitoring the availability of the Site database

- monitoring changes in the Site database

- Citrix Config Synchronizer Service (CSS). In the Windows Server operating system it runs as the process ConfigSyncService. Its main tasks are the following:

- when a configuration change in the Site database is detected, copying the content of the Site database to the High Availability Service (secondary broker)

- providing the High Availability Service(s) with information about all other controllers in your Site (primary zone), including any additional zones

- Citrix High Availability Service, also called the Secondary Broker Service. In the Windows Server operating system it runs as the process HighAvailabilityService. Its main task is to handle all new and existing connections and sessions during a database outage.

- Microsoft SQL Server Express LocalDB: a dedicated SQL Express instance on every controller, used to store all Site data synchronized from the Site database. Only the secondary broker communicates with this database; you cannot use PowerShell cmdlets to change anything in it. The LocalDB cannot be shared across Controllers.

Process flow during normal operations

- The principal broker (Citrix Broker Service) on a Controller accepts connection requests from StoreFront and communicates with the Site database to connect users with VDAs that are registered with the Controller. In the background, the broker monitors the database status: a heartbeat message is exchanged between a Delivery Controller and the database every 20 seconds, with a default timeout of 40 seconds.

- Every 2 minutes, a check is made to determine whether changes have been made to the principal broker’s configuration. Those changes could have been initiated by PowerShell/Studio actions (such as changing a Delivery Group property) or by system actions (such as machine assignments). The check does not cover information such as which user is connected to which server (load balancing) or which applications are in use, referred to as the current state of the Site/Farm.

- If a change has been made since the last check, the principal broker uses the Citrix Config Synchronizer Service (CSS) to synchronize (copy) information to a secondary broker (Citrix High Availability Service) on the Controller.

- The secondary broker imports the data into a temporary database (HAImportDatabaseName) in Microsoft SQL Server Express LocalDB on the Controller.

- When the import into the temporary database succeeds, the previous database is removed and the temporary database is renamed to HADatabaseName. The LocalDB database is therefore re-created each time synchronization occurs. The CSS ensures that the information in the secondary broker’s LocalDB database matches the information in the Site database. Correlated event IDs:

- id 503 – CSS receives a config change

- id 504 – LocalDB update successful

- id 505 – LocalDB update failure

- If no changes have occurred since the last check, no data is copied.
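The synchronization steps above can be sketched as a small Python model. This is not Citrix code: the LocalDB is modeled as a plain dictionary, `LocalDbModel` and `sync_check` are hypothetical names, and the behavior after a failed import (the previous HADatabaseName stays in place and the next check retries) is an assumption. The 2-minute interval, the database names and event IDs 503/504/505 come from the text.

```python
import copy
from dataclasses import dataclass, field
from typing import Optional

CHECK_INTERVAL_SECONDS = 120  # the principal broker looks for changes every 2 minutes

EVENT_CHANGE_RECEIVED = 503  # CSS receives a config change
EVENT_UPDATE_OK = 504        # LocalDB update successful
EVENT_UPDATE_FAILED = 505    # LocalDB update failure


@dataclass
class LocalDbModel:
    """Stand-in for LocalDB: database name -> configuration snapshot."""
    databases: dict = field(default_factory=dict)
    last_synced: Optional[dict] = None


def sync_check(site_config: dict, local: LocalDbModel, import_succeeds: bool = True) -> list:
    """Run one periodic check and return the event IDs it would log."""
    if site_config == local.last_synced:
        return []  # no change since the last check: no data is copied

    events = [EVENT_CHANGE_RECEIVED]
    if not import_succeeds:
        # Assumption: a failed import leaves the previous HADatabaseName untouched,
        # and last_synced is not updated, so the next check retries the copy.
        return events + [EVENT_UPDATE_FAILED]

    # 1) Import a full copy into the temporary database.
    local.databases["HAImportDatabaseName"] = copy.deepcopy(site_config)
    # 2) Remove the previous database and rename the temporary one.
    local.databases.pop("HADatabaseName", None)
    local.databases["HADatabaseName"] = local.databases.pop("HAImportDatabaseName")
    local.last_synced = copy.deepcopy(site_config)
    return events + [EVENT_UPDATE_OK]
```

If the configuration has not changed, calling `sync_check` returns an empty list, which matches the "no changes, no copy" rule. A real scheduler would call it every `CHECK_INTERVAL_SECONDS`.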

Standard LHC process flow is presented in the figure below:

Figure 2 – LHC standard mode

Process flow during database outage

- The principal broker can no longer communicate with the Site database

- The principal broker stops listening for StoreFront and VDA information (marked with red X in the figure below). Correlated event ids: 1201, 3501

- The principal broker then instructs the secondary broker (High Availability Service) to start listening for and processing connection requests (marked with a red dashed line in the figure below). Correlated event ids: 2007, 2008

- An election, based on the alphabetical list of controller FQDNs, determines which controller’s secondary broker takes over. Only one secondary broker can accept connections during a database outage. Correlated event ID: 3504. The non-elected secondary brokers in the zone actively reject incoming connection and VDA registration requests.

- While the secondary broker is handling connections, the principal broker continues to monitor the connection to the Site database

- As soon as a VDA communicates with the secondary broker, a re-registration process is triggered (shown with red arrows for XML and VDA registration traffic in the figure below). During that process, the secondary broker also gets current session information about that VDA. Correlated event IDs: 1002, 1014, 1017

- The connection to the Site database is restored.

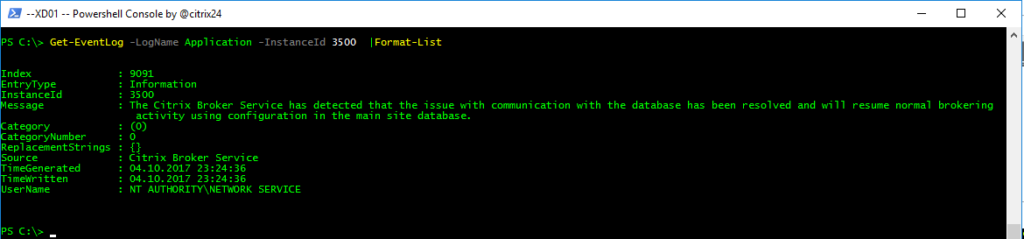

- The principal broker instructs the secondary broker to stop listening for connection information, and the principal broker resumes brokering operations. Correlated event ids: 1200-> 3503-> 3500, 3004-> 3000-> 1002

- The secondary broker removes all VDA registration information captured during the outage (this information is lost and is not synchronized to the Site database) and resumes updating the LocalDB database with configuration changes received from the CSS.

- The next time a VDA communicates with the principal broker, a re-registration process is triggered.
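The hand-over and recovery rules above can be illustrated with a toy Python model of a single controller. It is a sketch under simplifying assumptions, not Citrix code: it ignores the election between controllers, and `ControllerModel` and its methods are hypothetical names. The model captures three rules. A VDA re-registers whenever it talks to a broker it is not registered with. The secondary broker is active only while the database is unreachable. Registrations captured by the secondary broker are dropped when the outage ends.

```python
from dataclasses import dataclass, field


@dataclass
class ControllerModel:
    """Toy model of one Delivery Controller during an outage (not Citrix code)."""
    db_reachable: bool = True
    registrations: dict = field(default_factory=dict)  # VDA name -> "principal"/"secondary"
    registrations_performed: int = 0

    @property
    def active_broker(self) -> str:
        # During an outage the principal stops listening and the secondary takes over.
        return "principal" if self.db_reachable else "secondary"

    def vda_contacts(self, vda: str) -> str:
        """A VDA talks to the controller; returns the broker that handles it."""
        broker = self.active_broker
        if self.registrations.get(vda) != broker:
            # Talking to a broker it is not registered with triggers (re-)registration.
            self.registrations[vda] = broker
            self.registrations_performed += 1
        return broker

    def set_database_reachable(self, reachable: bool) -> None:
        if reachable and not self.db_reachable:
            # Outage ends: registrations captured by the secondary broker are
            # discarded; they are never synchronized to the Site database.
            self.registrations = {
                vda: broker for vda, broker in self.registrations.items()
                if broker != "secondary"
            }
        self.db_reachable = reachable
```

In this model, one VDA contacted before, during, and after an outage registers three times: once with the principal, once with the secondary, and once more with the principal after recovery.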

LHC process flow during database outage is presented in the figure below:

Sites with multiple controllers and zones

As mentioned above, the Config Synchronizer Service updates the secondary broker with information about all Controllers in the site or zone. If your deployment contains multiple zones, this is done for each zone independently and affects all Controllers in every zone. With that information, each secondary broker knows about all peer secondary brokers.

In a single-zone deployment (or a multi-zone deployment in which all controllers are assigned to a single zone), the election is based on the FQDNs of all configured controllers.

In a multi-zone deployment with Delivery Controllers in several zones, the election is done separately in each zone, based on the FQDNs of the controllers configured in that zone.
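The per-zone election can be sketched in Python as follows. This is a minimal sketch that rests on two assumptions the post does not confirm. First, the controller whose FQDN sorts first, using a case-insensitive ordinal comparison, wins. Second, controllers that are not reachable do not take part. `elect_per_zone` and the example FQDNs are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Optional, Set


def elect_per_zone(controllers: Dict[str, str],
                   reachable: Optional[Set[str]] = None) -> Dict[str, str]:
    """Return {zone: elected controller FQDN}.

    controllers maps each controller FQDN to its zone name.
    Assumptions: the FQDN that sorts first (case-insensitively) wins, and
    controllers not in `reachable` (if given) do not take part.
    """
    candidates = defaultdict(list)
    for fqdn, zone in controllers.items():
        if reachable is None or fqdn in reachable:
            candidates[zone].append(fqdn)
    # One independent election per zone.
    return {zone: min(names, key=str.lower) for zone, names in candidates.items()}


site = {
    "ddc02.corp.local": "Primary",
    "ddc01.corp.local": "Primary",
    "ddc-b1.corp.local": "Branch",
    "ddc-a1.corp.local": "Branch",
}
print(elect_per_zone(site))
# {'Primary': 'ddc01.corp.local', 'Branch': 'ddc-a1.corp.local'}
```

If all four controllers were placed in one zone, the model would hold a single election and pick `ddc-a1.corp.local`, because "-" sorts before digits in an ordinal comparison. Under this assumption, naming conventions decide which controller must be sized for the whole zone.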

SQL Express LocalDB

LocalDB is an instance of SQL Server Express that can create and open SQL Server databases. The local SQL Express database has been part of the XenApp/XenDesktop installation since version 7.9. It is installed automatically when you install a new controller or upgrade a controller from a version earlier than 7.9.

The binaries for SQL Express LocalDB are located in:

%ProgramFiles%\Microsoft SQL Server\120\LocalDB\

The LHC database files are located at:

C:\Windows\ServiceProfiles\NetworkService\HaDatabaseName.mdf

C:\Windows\ServiceProfiles\NetworkService\HaDatabaseNamelog.ldf

During every import, a temporary database is created:

C:\Windows\ServiceProfiles\NetworkService\HaImportDatabaseName.mdf

C:\Windows\ServiceProfiles\NetworkService\HaImportDatabaseNamelog.ldf

The Local Host Cache database contains only static configuration information; it does not contain the current state of the Site/Farm. In a multi-zone scenario, the Local Host Cache databases in all zones contain exactly the same set of information.

The size comparison of the 20 largest tables is shown in the figure below:

LocalDB is used exclusively by the secondary broker. Neither PowerShell cmdlets nor Citrix Studio can be used to communicate with or update this database. The LocalDB cannot be shared across Controllers; each controller has its own copy of the Site database content.

Design considerations

The following must be considered when using local host cache:

- Elections – When a zone loses contact with the SQL database, an election occurs, nominating a single Delivery Controller as master. All remaining controllers go into idle mode. A simple alphabetical order (of the FQDNs of the registered Delivery Controllers) determines the winner of the election.

- Sizing – When using local host cache mode, a single delivery controller is responsible for all VDA registrations, enumerations, launches and updates. The elected controller must have enough resources (CPU and RAM) to handle the entire load for the zone. A single controller can scale to 10,000 users, which influences the zone design.

- RAM – The Local Host Cache services can consume more than 2 GB of RAM, depending on the duration of the outage and the number of user launches during it:

- the LocalDB service can use approximately 1.2 GB of RAM (up to 1 GB for the database cache, plus 200 MB for running SQL Server Express LocalDB);

- the High Availability Service can use up to 1 GB of RAM if an outage lasts for an extended interval with many logons occurring.

- CPU – Local Host Cache can use up to 4 cores in a single socket. Consider a combination of multiple sockets with multiple cores to provide the expected performance. In Citrix testing, a 2×3 (2 sockets, 3 cores) configuration performed better than 4×1 and 6×1 configurations.

- Storage – In local host cache mode, storage use increased by 1 MB every 2 to 3 minutes with an average of 10 logons per second. When connectivity to the Site database is restored, the local database is recreated and the space is returned. However, the broker must have sufficient space on the drive where the LocalDB is installed to allow for database growth during an outage. The additional I/O load during a database outage should be considered as well.

- Power Options – Powered off virtual resources will not start when the delivery controller is in local host cache mode. Pooled virtual desktops that reboot at the end of a session are placed into maintenance mode.


- Consoles – When using local host cache mode, Studio and PowerShell are not available.

- VDI limits:

- In a single-zone VDI deployment, up to 10,000 VDAs can be handled effectively during an outage.

- In a multi-zone VDI deployment, up to 10,000 VDAs in each zone can be handled effectively during an outage, to a maximum of 40,000 VDAs in the site.
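The sizing figures above can be turned into a quick back-of-the-envelope check. This Python sketch uses only the numbers quoted in the list. It assumes that LocalDB growth is linear at the tested rate of about 10 logons per second, which real workloads may not follow. The function names are hypothetical.

```python
# Figures quoted in the design considerations above.
LOCALDB_RAM_GB = 1.2      # up to 1 GB database cache + ~200 MB for LocalDB itself
HA_SERVICE_RAM_GB = 1.0   # High Availability Service, long outage with many logons
MAX_VDAS_PER_ZONE = 10_000
MAX_VDAS_PER_SITE = 40_000


def extra_ram_gb() -> float:
    """Upper-end extra RAM the elected controller may need during an outage."""
    return LOCALDB_RAM_GB + HA_SERVICE_RAM_GB


def localdb_growth_mb(outage_minutes: float) -> tuple:
    """(low, high) LocalDB growth at 1 MB every 3 to 2 minutes.

    Assumption: linear growth at the tested rate of ~10 logons per second.
    """
    return outage_minutes / 3, outage_minutes / 2


def vda_limit_problems(vdas_per_zone: dict) -> list:
    """List violations of the 10,000-per-zone and 40,000-per-site VDI limits."""
    problems = [
        f"zone {zone}: {count} VDAs > {MAX_VDAS_PER_ZONE}"
        for zone, count in vdas_per_zone.items()
        if count > MAX_VDAS_PER_ZONE
    ]
    total = sum(vdas_per_zone.values())
    if total > MAX_VDAS_PER_SITE:
        problems.append(f"site: {total} VDAs > {MAX_VDAS_PER_SITE}")
    return problems


print(localdb_growth_mb(8 * 60))                              # (160.0, 240.0)
print(vda_limit_problems({"Primary": 9_000, "Branch": 12_000}))
# ['zone Branch: 12000 VDAs > 10000']
```

For example, an 8-hour outage at that logon rate would need roughly 160 to 240 MB of free space on the LocalDB drive, on top of about 2.2 GB of extra RAM on the elected controller.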

Monitoring

When preparing a dedicated monitoring template for XenDesktop 7.15, consider the event log items listed in the table below.
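A monitoring template mostly comes down to matching event IDs. The Python sketch below collects the Delivery Controller event IDs mentioned in this post into a lookup and flags the ones that suggest an outage or a failed sync. The "alert"/"info" split is a hypothetical choice, not a Citrix classification. In practice you would also filter by event source, because other applications can log the same numeric IDs to the Application log.

```python
# Delivery Controller event IDs mentioned in this post. The "alert"/"info"
# split is a hypothetical choice for a monitoring template, not Citrix's.
LHC_EVENTS = {
    503: ("info", "CSS received a configuration change"),
    504: ("info", "LocalDB update successful"),
    505: ("alert", "LocalDB update failure"),
    1201: ("alert", "Site database connection lost"),
    3501: ("alert", "Site database connection lost"),
    3504: ("alert", "Secondary broker election (outage mode)"),
    3503: ("info", "Site database connection restored"),
    3500: ("info", "Site database connection restored"),
}


def triage(event_ids):
    """Split observed Application-log event IDs into alerts and unknown IDs."""
    alerts, unknown = [], []
    for event_id in event_ids:
        level, text = LHC_EVENTS.get(event_id, (None, None))
        if level is None:
            unknown.append(event_id)
        elif level == "alert":
            alerts.append((event_id, text))
    return alerts, unknown
```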

Tests and Troubleshooting

Force an outage

You might want to force a database outage:

- If your network is going up and down repeatedly. Forcing an outage until the network issues are resolved prevents continuous transitions between normal and outage modes.

- To test a disaster recovery plan.

- While replacing or servicing the site database server.

To force an outage, edit the registry of each server containing a Delivery Controller.

- In HKLM\Software\Citrix\DesktopServer\LHC, set OutageModeForced to 1. This instructs the broker to enter outage mode, regardless of the state of the database. (Setting the value to 0 takes the server out of outage mode.)

- In a Citrix Cloud scenario, the connector enters outage mode, regardless of the state of the connection to the control plane or primary zone.
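Because the registry value has to be set on every Delivery Controller, it can help to generate a .reg file once and import it on each one. The Python sketch below renders that file for both modes. The key path and value name come from the text above. The REG_DWORD type is an assumption, and `outage_mode_reg` is a hypothetical helper.

```python
# Key path from the text above; the REG_DWORD type is an assumption.
LHC_KEY = r"HKEY_LOCAL_MACHINE\Software\Citrix\DesktopServer\LHC"


def outage_mode_reg(forced: bool) -> str:
    """Return .reg file text setting OutageModeForced to 1 (forced) or 0 (normal)."""
    value = 1 if forced else 0
    return (
        "Windows Registry Editor Version 5.00\n"
        "\n"
        f"[{LHC_KEY}]\n"
        f'"OutageModeForced"=dword:{value:08x}\n'
    )


print(outage_mode_reg(True))
```

Save the output as a .reg file and import it on each Delivery Controller, for example with `reg import`. Use the `forced=False` variant to take the controllers out of outage mode again.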

Troubleshooting

As usual, the main source of information about the status of Local Host Cache is the Windows Event Viewer. All actions performed by LHC components are logged to the Windows Server Application log. Examples of the most important events are presented in the figures below.

Delivery Controller

Event ID: 503 and 504 – LHC configuration change and update

Event ID: 503 – details

Event ID: 1201 and 3501 – Site database connection lost

Event ID: 1201 – details

Event ID: 3504 – details

Event ID: 3501 – details

Event ID: 3502 – details

Event ID: 3503 – Site database connection restored

Event ID: 3500 – Site database connection restored

VDA

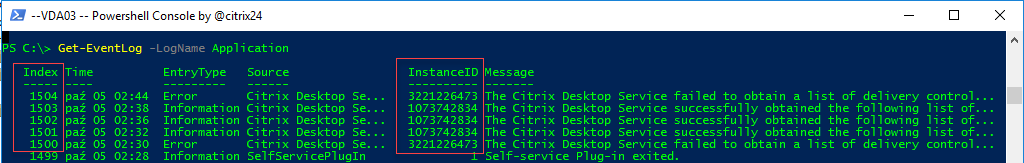

Event IDs on the VDA use a slightly different notation. Although Event Viewer displays them as 1010 and 1001, the values actually stored are 1073742834 and 3221226473 respectively.
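The stored values can be decoded, assuming they follow the standard Windows message-identifier layout. In that layout, the top two bits carry the severity and the low 16 bits carry the code that Event Viewer displays. The Python sketch below applies that layout. `decode_event_value` is a hypothetical helper name.

```python
# Standard Windows message-ID layout (assumed to apply to these VDA events):
# bits 31-30 = severity, bits 15-0 = the event ID that Event Viewer displays.
SEVERITY = {0: "Success", 1: "Informational", 2: "Warning", 3: "Error"}


def decode_event_value(value: int) -> tuple:
    """Return (displayed event ID, severity name) for a raw 32-bit value."""
    event_id = value & 0xFFFF
    severity = SEVERITY[(value >> 30) & 0b11]
    return event_id, severity


for raw in (1073742834, 3221226473):
    print(raw, decode_event_value(raw))
# 1073742834 (1010, 'Informational')
# 3221226473 (1001, 'Error')
```

This explains the two numbers. 1073742834 is 2^30 + 1010 (informational), and 3221226473 is 3·2^30 + 1001 (error).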

Event ID: 1010 / 1073742834 – details

Event ID: 1001 / 3221226473 – details
